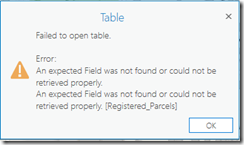

Recently I ran into an interesting issue while upgrading some ArcGIS Desktop arcpy scripts to ArcGIS Pro. I was expecting a smooth update by simply running the 2to3 tool. However, an error stopped me: the script raised ‘An expected field was not found or retrieved properly’ while running the Copy Features tool.

Most of the answers I found on Google were about invalid field names in an SQL expression, which implies at least one join on the source layer. In my case there was a join, but it was removed before Copy Features. I then repeated the procedure manually in ArcGIS Pro and saw the same error after Remove Join, when opening the layer's attribute table.

I wondered what could possibly be wrong. The logic of the script was simple, as outlined below.

- Create a table using the Frequency tool.

- Create a table view using the Make Table View tool with a where clause.

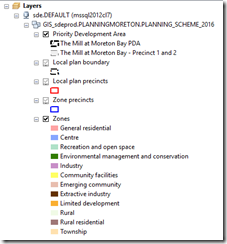

- Create a feature layer using the Make Feature Layer tool without any where clause applied.

- Join the feature layer to the table view, with join type KEEP_COMMON.

- Select all features using Select Layer By Attribute with the where clause “1=1”.

- Remove the join.

- Run Copy Features.

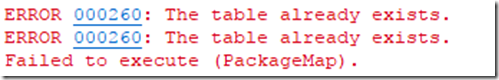
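A minimal sketch of these steps in arcpy might look like the following. The function name, the layer and view names, and all arguments are illustrative placeholders, not the ones from my actual script.

```python
def copy_features_via_join(in_features, freq_table, freq_fields,
                           where_clause, join_field, out_features):
    """Run the steps listed above. All names here are hypothetical."""
    # Imported lazily: arcpy is only available in an ArcGIS Python environment.
    import arcpy

    # 1. Frequency table for the chosen field(s).
    arcpy.analysis.Frequency(in_features, freq_table, freq_fields)

    # 2. Table view on the frequency table, filtered by the where clause.
    arcpy.management.MakeTableView(freq_table, "freq_view", where_clause)

    # 3. Feature layer on the source features, with no where clause.
    arcpy.management.MakeFeatureLayer(in_features, "feature_lyr")

    # 4. Join the layer to the view, keeping only matching records.
    arcpy.management.AddJoin("feature_lyr", join_field,
                             "freq_view", join_field, "KEEP_COMMON")

    # 5. Select everything that survived the join.
    arcpy.management.SelectLayerByAttribute("feature_lyr", "NEW_SELECTION", "1=1")

    # 6. Drop the join so the output has only the original fields.
    arcpy.management.RemoveJoin("feature_lyr")

    # 7. Copy the selected features (the step that failed in ArcGIS Pro).
    arcpy.management.CopyFeatures("feature_lyr", out_features)
    return out_features
```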

The issue clearly appears after Remove Join. I used arcpy.da.Describe() to inspect the layer and eventually found the cause: the whereClause of the layer is not None, even though the layer was created without one. It seems the layer gets the where clause from the Add Join tool but does not clear it when the join is removed. The same workflow works fine in ArcMap.
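The check itself is short. Here is a rough sketch, assuming the Describe dictionary exposes the clause under the "whereClause" key as it did for my layer; the function name and the layer name in the usage note are placeholders.

```python
def leftover_where_clause(layer):
    """Return the layer's where clause, or None if it is empty or absent."""
    # Imported lazily: arcpy is only available in an ArcGIS Pro Python environment.
    import arcpy

    # arcpy.da.Describe returns a plain dictionary of the layer's properties.
    desc = arcpy.da.Describe(layer)

    # Treat an empty string the same as a missing clause.
    return desc.get("whereClause") or None
```

Calling `leftover_where_clause("feature_lyr")` right after Remove Join and getting anything other than None back would point to the behaviour described above.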

Solution? A workaround is easy enough. Instead of creating a table view, export the table to a new table with the same where clause, then use the new table in the rest of the steps.
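As a sketch of that workaround, the export can be done with the Table Select tool, which is one of several tools that can write a filtered copy of a table; I am using it here only for illustration. The function name and arguments are placeholders.

```python
def join_to_exported_table(in_features, freq_table, filtered_table,
                           where_clause, join_field, out_features):
    """Same workflow, but joining to an exported table instead of a table view."""
    # Imported lazily: arcpy is only available in an ArcGIS Python environment.
    import arcpy

    # Export the filtered rows to a real table; no table view is created.
    arcpy.analysis.TableSelect(freq_table, filtered_table, where_clause)

    # The remaining steps are unchanged, except the join targets the new table.
    arcpy.management.MakeFeatureLayer(in_features, "feature_lyr")
    arcpy.management.AddJoin("feature_lyr", join_field,
                             filtered_table, join_field, "KEEP_COMMON")
    arcpy.management.SelectLayerByAttribute("feature_lyr", "NEW_SELECTION", "1=1")
    arcpy.management.RemoveJoin("feature_lyr")
    arcpy.management.CopyFeatures("feature_lyr", out_features)
    return out_features
```

Because the where clause is applied once during the export, the joined table carries no clause of its own that the layer could hold on to.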

The versions of ArcMap and ArcGIS Pro I used for testing were 10.6.1 and 2.2.4, respectively.